The hype around Artificial Intelligence (AI) has been so dramatic that it feels like AI should be incredibly complex to implement and require a dedicated team. If you want to build a competitor to OpenAI, that may be true. But chances are, you’re not in the AI business. If you want to add an AI feature to your existing site, such as letting users chat with a large language model (LLM) like ChatGPT to solve problems, generating images with DALL-E, or adding speech-to-text or audio captions, it really doesn’t take a lot of work. These types of AI can be easily integrated into your existing sites, even if you’re mired in legacy code.

In a recent project, we integrated OpenAI’s GPT into a pre-existing eLearning site with minimal code, little overhead, and no strain on existing infrastructure. Total development time was just over two weeks from start to production.

Backend Implementation with Emma

Integrating ChatGPT as a Separate Instance

If integrating ChatGPT into an existing site sounds daunting, take heart: it’s just an API, and a rather simple one at that. You have an API key; you take the user’s input as a message, send it to the API, and return the API’s response to the user. It really is that simple, more or less. In our eLearning project, the backend was just over 500 lines of code. Easy peasy, lemon squeezy.
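
To make that round trip concrete, here’s a minimal TypeScript sketch. It assumes OpenAI’s Chat Completions endpoint (`POST https://api.openai.com/v1/chat/completions`), a runtime with a global `fetch` (such as Node 18+), and an illustrative model name. The helper names `buildChatRequest`, `extractReply`, and `sendChat` are hypothetical, not code from the project.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Plain description of the HTTP request, kept separate from fetch so it is easy to test.
interface ChatRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

const CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions";

// The API key travels in a Bearer header; the conversation goes in the JSON body.
function buildChatRequest(apiKey: string, model: string, messages: ChatMessage[]): ChatRequest {
  return {
    url: CHAT_COMPLETIONS_URL,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model, messages }),
  };
}

// Pull the assistant's text out of a Chat Completions response body.
function extractReply(json: unknown): string {
  const content = (json as { choices?: { message?: { content?: unknown } }[] } | null)
    ?.choices?.[0]?.message?.content;
  if (typeof content !== "string") {
    throw new Error("Unexpected response shape from the chat API");
  }
  return content;
}

// Send the conversation and return the reply (needs network access and a real key).
async function sendChat(
  apiKey: string,
  messages: ChatMessage[],
  model = "gpt-3.5-turbo", // illustrative model name
): Promise<string> {
  const req = buildChatRequest(apiKey, model, messages);
  const res = await fetch(req.url, { method: req.method, headers: req.headers, body: req.body });
  if (!res.ok) {
    throw new Error(`Chat API request failed with status ${res.status}`);
  }
  return extractReply(await res.json());
}
```

Splitting request-building and response-parsing out of `sendChat` keeps the only network-dependent piece small, which makes the rest easy to unit test.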

The one caveat with the GPT API is that it’s really meant to be called from the backend rather than the frontend (there are exceptions, of course), not least because calling it from the browser would expose your secret API key. While your site won’t be the one crunching data (that’s the API’s job), it still has to wait for a response, and if you’ve used ChatGPT, you know that responses can sometimes take a hot minute. To avoid straining existing infrastructure, we typically recommend spinning up a separate server instance for the AI integration. An exception might be a small user base, such as a feature for admin users only. A separate instance may seem like a drawback because it means more work, but it’s actually an advantage twice over. First, the AI code lives outside your existing codebase, so it can’t affect that codebase, and changes to your existing codebase won’t affect the AI instance. Second, your existing server load is untouched, so any issues or unexpected spikes in usage are entirely contained.
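
A separate instance doesn’t need to be elaborate. Here’s a hypothetical sketch of the one handler such a service might expose, assuming the main site POSTs JSON shaped like `{ "messages": [...] }`. It validates the body, forwards the conversation through an injected `send` function (for example, a wrapper around the Chat Completions API), and maps failures to HTTP status codes. The names and request shape are illustrative, and wiring it into an actual server (Node’s `http` module, Express, and so on) is left out.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// What the AI service sends back to the main site.
interface HandlerResult {
  status: number;
  body: { reply?: string; error?: string };
}

type SendFn = (messages: ChatMessage[]) => Promise<string>;

const ROLES: readonly string[] = ["system", "user", "assistant"];

// Runtime check that an unknown value is a non-empty, well-formed message list.
function isMessageList(value: unknown): value is ChatMessage[] {
  return (
    Array.isArray(value) &&
    value.length > 0 &&
    value.every(
      (m) =>
        typeof m === "object" &&
        m !== null &&
        ROLES.includes((m as ChatMessage).role) &&
        typeof (m as ChatMessage).content === "string",
    )
  );
}

// Handle one chat request. Deployed on its own instance, slow or failed
// LLM calls tie up only this service.
async function handleChatRequest(rawBody: string, send: SendFn): Promise<HandlerResult> {
  let parsed: unknown;
  try {
    parsed = JSON.parse(rawBody);
  } catch {
    return { status: 400, body: { error: "Body must be valid JSON" } };
  }
  const messages = (parsed as { messages?: unknown } | null)?.messages;
  if (!isMessageList(messages)) {
    return { status: 400, body: { error: "Expected a non-empty messages array" } };
  }
  try {
    return { status: 200, body: { reply: await send(messages) } };
  } catch {
    // Upstream (LLM API) failure: report a gateway error instead of crashing.
    return { status: 502, body: { error: "The AI service could not get a response" } };
  }
}
```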

Customizing for Use Case

If you’re thinking about using AI on your own site, you might be wondering how to customize it to suit your needs. You might be picturing complex training and fine-tuning datasets, but chances are you don’t need anything so involved. At the time of writing, fine-tuning isn’t even available for GPT-4 or GPT-3.5-Turbo.

If what you’re looking for is a chat interface where the LLM is aware of the context of the chat and what it will be discussing with your users, you can simply tell it as much. The OpenAI chat API allows for “system” messages that you can programmatically add to a chat dialogue in order to give instructions to the AI. Do you want an AI that can talk to customers about tires? Instruct the LLM, “You will be helping me, a customer or potential customer, answer questions about tires.” You’ll definitely need to play around with different prompts to find what actually works for your use case.
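
At the API level, a system message is simply an entry in the `messages` array with `role: "system"`, typically placed first. Here’s a minimal sketch using the tire prompt above; `withSystemPrompt` is a hypothetical helper, and the user question is made up for illustration.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

const TIRE_ASSISTANT_PROMPT =
  "You will be helping me, a customer or potential customer, answer questions about tires.";

// Put the instructions first; the user's visible conversation follows unchanged.
function withSystemPrompt(systemPrompt: string, conversation: ChatMessage[]): ChatMessage[] {
  return [{ role: "system", content: systemPrompt }, ...conversation];
}

// This array is what would be sent as the request's `messages`.
const messages = withSystemPrompt(TIRE_ASSISTANT_PROMPT, [
  { role: "user", content: "What does the 55 in 225/55R17 mean?" },
]);
```

Adding the prompt at send time, rather than storing it with the visible conversation, means you can keep tweaking it while you search for the wording that works.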

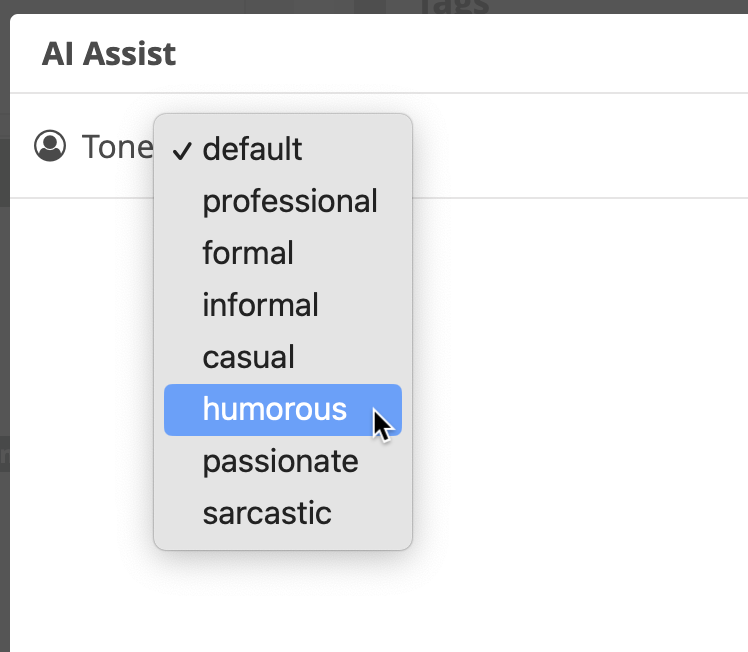

With system messages in mind, writing features into your app becomes much easier. For the eLearning project, the chat interface we created was for admins to generate educational content. Because the LLM’s output might be used, directly or indirectly, to populate educational material, we wanted users to be able to switch easily between tones of voice. Users could simply tell the AI to respond in a different voice, but that may not be immediately obvious to the feature’s audience, and finding phrasing that consistently produces the right results can take some trial and error.

We added a dropdown feature to switch between voices such as “formal,” “casual,” “humorous,” and “sarcastic.” Depending on the user’s selection, our backend added a system message such as, “Respond in a humorous, but professional tone.” Voila! Feature complete.
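
Here’s one way the dropdown might map onto system messages, assuming the four tones above. Only the humorous instruction is the example wording quoted above; the other three strings are placeholders, and `applyTone` and `isTone` are hypothetical names. Appending the instruction after the conversation (instead of before it) is one design choice, so that a tone switch mid-conversation applies to the next reply.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Values the frontend dropdown can send.
const TONES = ["formal", "casual", "humorous", "sarcastic"] as const;
type Tone = (typeof TONES)[number];

const TONE_INSTRUCTIONS: Record<Tone, string> = {
  formal: "Respond in a formal, professional tone.", // placeholder wording
  casual: "Respond in a casual, but professional tone.", // placeholder wording
  humorous: "Respond in a humorous, but professional tone.", // the example wording above
  sarcastic: "Respond in a sarcastic, but professional tone.", // placeholder wording
};

function isTone(value: string): value is Tone {
  return (TONES as readonly string[]).includes(value);
}

// Return a new message list with the tone instruction appended.
function applyTone(conversation: ChatMessage[], selected: string): ChatMessage[] {
  // Ignore unknown values from the client instead of passing them to the model.
  if (!isTone(selected)) return conversation;
  return [...conversation, { role: "system", content: TONE_INSTRUCTIONS[selected] }];
}
```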

Frontend Implementation with Philip

Considering Interfaces of a Chatbot

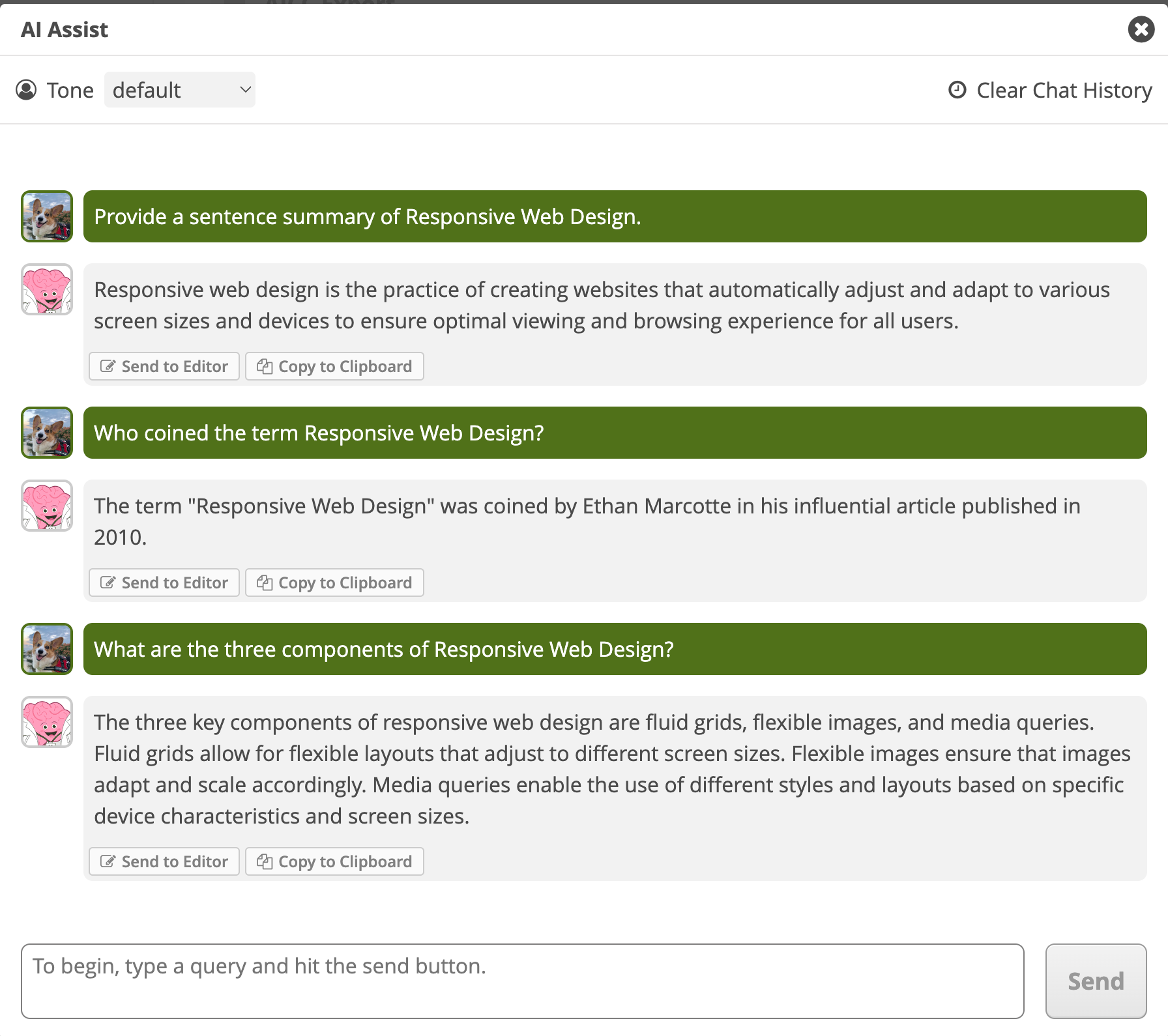

Users of ChatGPT are likely familiar with tools like text messaging via their phone, Slack, or Microsoft Teams. AI features like the one we created for the eLearning project often use this “chat” metaphor, even down to the structure of API calls. It made sense to build the design of our UI on this same “chat” metaphor.

Given the available screen real estate and the sheer length of responses the LLM can produce, we leaned toward a Slack-style messaging approach. We also had an existing brand and UI elements to consider as we worked with our client. The design stacks the conversation in chronological order, with the newest message at the bottom. The user’s messages are displayed in white text on the brand background color, and the LLM’s responses in dark gray text on a light gray background. This combination, along with an image to the left of each message, gives users visual cues about who sent which message.

Because the user needs the LLM’s response before the conversation can usefully continue, the chat interface is disabled while a response is pending and re-enabled once the reply has been received and printed in the chat window.
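
Here’s a sketch of that locking behavior, assuming a small hypothetical `ChatControls` interface in place of direct DOM access. Re-enabling the input in a `finally` block means a failed or rejected request can’t leave the chat permanently disabled.

```typescript
// Minimal view of the chat input area, so the logic can run without a DOM.
interface ChatControls {
  setDisabled(disabled: boolean): void;
  showError(message: string): void;
}

// Disable input, wait for the LLM reply, and always re-enable, even on failure.
async function sendWhileLocked(
  controls: ChatControls,
  send: () => Promise<string>,
): Promise<string | null> {
  controls.setDisabled(true);
  try {
    return await send();
  } catch {
    controls.showError("No response from the AI. Please try again.");
    return null;
  } finally {
    controls.setDisabled(false);
  }
}
```

In the browser, `setDisabled` might toggle the `disabled` property on the chat’s textarea and send button, and `showError` might print a message in the chat window.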

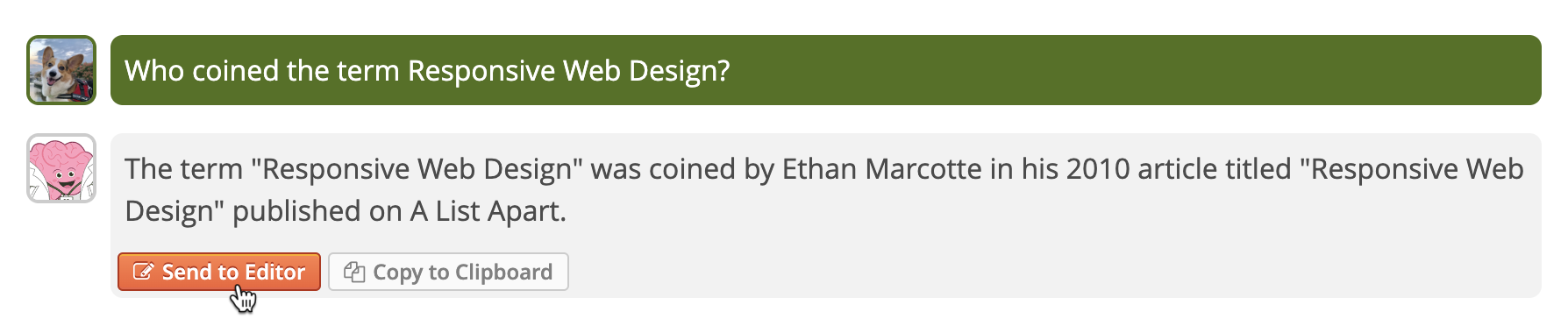

The main purpose of this tool is to aid an instructor in creating content for their learners on the site. Each message that is sent back from the LLM is content the instructor may want to add to their content editor. To accomplish this interaction, we added a button to each received message that allows the instructor to send the full message directly into their CKEditor instance. Upon hitting that button, the chat modal closes, and the text is added to their editor.
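
Here’s a minimal sketch of the “Send to Editor” action, assuming the LLM reply is plain text that should be appended after the editor’s existing content. It relies only on the `getData()` and `setData()` methods that CKEditor editor instances expose; the HTML escaping, the blank-line paragraph rule, and the `closeModal` callback are illustrative assumptions rather than the project’s code.

```typescript
// The two methods this sketch needs from a CKEditor instance.
interface EditorLike {
  getData(): string;
  setData(data: string): void;
}

// Escape characters that are significant in HTML so LLM text is inserted as text.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Blank-line-separated blocks become <p> elements; single line breaks become <br>.
function textToParagraphs(text: string): string {
  return text
    .split(/\n\s*\n/)
    .map((block) => block.trim())
    .filter((block) => block.length > 0)
    .map((block) => `<p>${escapeHtml(block).replace(/\n/g, "<br>")}</p>`)
    .join("");
}

// "Send to Editor": append the reply to the editor's content, then close the chat modal.
function sendToEditor(editor: EditorLike, reply: string, closeModal: () => void): void {
  editor.setData(editor.getData() + textToParagraphs(reply));
  closeModal();
}
```

Because `setData()` replaces the whole document, inserting at the cursor position instead may suit some editing workflows better.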

Storing and Managing an AI Conversation

It’s important to remember that AI isn’t “smart” on its own. The LLM does not remember a conversation, although the interface feels like that is the case. Instead, each time a new message is sent to the LLM in that chat instance, the whole conversation is resent as well (just like ChatGPT). After being given the whole conversation, the LLM provides a response to the most recent message and then promptly forgets the whole conversation.
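
In code, the LLM’s “memory” is just an array the client keeps and resends in full. Here’s a minimal sketch, assuming an injected `send` function that calls the chat API with the whole message list; the `Conversation` class is a hypothetical name.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

type SendFn = (messages: ChatMessage[]) => Promise<string>;

// The model keeps no state between calls, so the client owns the history.
class Conversation {
  private readonly history: ChatMessage[] = [];
  private readonly send: SendFn;

  constructor(send: SendFn) {
    this.send = send;
  }

  // Add the user's message, send a copy of the ENTIRE history, and record the reply.
  async ask(userText: string): Promise<string> {
    this.history.push({ role: "user", content: userText });
    const reply = await this.send([...this.history]).catch((err: unknown) => {
      // Drop the unanswered message so the history stays consistent on failure.
      this.history.pop();
      throw err;
    });
    this.history.push({ role: "assistant", content: reply });
    return reply;
  }

  get messages(): readonly ChatMessage[] {
    return this.history;
  }
}
```

Dropping the unanswered user message when a request fails is one way to plan for contingencies; letting the user retry that message is another.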

Because of this send-and-forget nature, we have to keep track of the whole conversation ourselves as part of the user interaction and plan for contingencies. We also have to decide how and when a conversation should be persisted between instances or restarted. We chose the localStorage API to store the active conversation. Each message is saved to localStorage as it is sent or received, so every API call to the LLM can include the whole conversation and the user gets a familiar messaging experience.

The dashboard of our eLearning app has multiple CKEditor instances. Our persistence strategy lets a conversation start in one editor instance and continue in another, so the instructor can quickly share the same LLM message across multiple editors. With this persistence, however, the user may sometimes need to start fresh. We added a “Clear Chat History” button that removes the stored conversation from localStorage. This made persistence a key feature while leaving the instructor in control of when a new conversation begins.
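
Here’s a minimal sketch of that persistence layer, covering both saving each message and the “Clear Chat History” button. The `aiChatHistory` key and `ChatHistoryStore` class are hypothetical. The store accepts anything with `getItem`, `setItem`, and `removeItem` (the `window.localStorage` methods it uses), so it can be tested outside the browser. Because every editor instance reads the same key, a conversation started in one editor picks up in another.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// The subset of the Web Storage API (window.localStorage) used here.
interface StorageLike {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

const STORAGE_KEY = "aiChatHistory"; // hypothetical key name

class ChatHistoryStore {
  private readonly storage: StorageLike;

  constructor(storage: StorageLike) {
    this.storage = storage;
  }

  // Read the saved conversation; missing or corrupt data means an empty chat.
  // (Array check only; a stricter version could validate each entry.)
  load(): ChatMessage[] {
    const raw = this.storage.getItem(STORAGE_KEY);
    if (raw === null) return [];
    try {
      const parsed: unknown = JSON.parse(raw);
      return Array.isArray(parsed) ? (parsed as ChatMessage[]) : [];
    } catch {
      return [];
    }
  }

  // Called every time a message is sent or received.
  append(message: ChatMessage): ChatMessage[] {
    const history = [...this.load(), message];
    this.storage.setItem(STORAGE_KEY, JSON.stringify(history));
    return history;
  }

  // Backs the "Clear Chat History" button.
  clear(): void {
    this.storage.removeItem(STORAGE_KEY);
  }
}

// In the browser: const store = new ChatHistoryStore(window.localStorage);
```

Clearing with `removeItem` rather than `localStorage.clear()` leaves any other data the site keeps in localStorage untouched.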

Inspiration and New Ideas

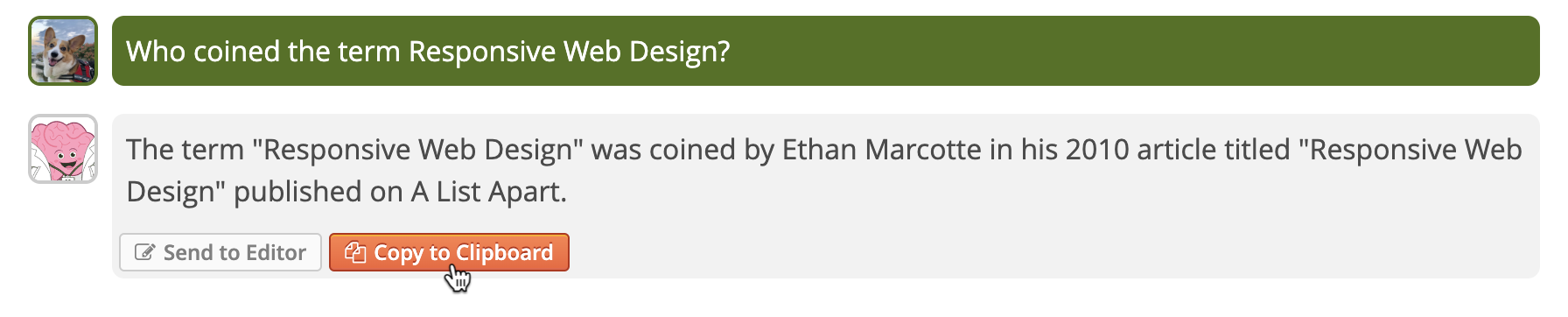

As we built out key features of the interface, new ideas and interactions emerged. Our primary feature, adding the LLM’s response to the editor, initially worked only on the most recent message, but we quickly saw the value of importing earlier messages too. Once that feature was complete, it occurred to us that this tool might be the instructor’s primary way of interacting with an LLM, and they might want to use its output outside the editor. So next to the “Send to Editor” button, we added a “Copy to Clipboard” button to each LLM response, letting the instructor take content from the tool into areas outside our editor.
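
Here’s a sketch of the “Copy to Clipboard” button, assuming the asynchronous Clipboard API. `navigator.clipboard.writeText()` returns a promise that can reject (for instance, when the browser denies clipboard access), so the sketch reports failure rather than failing silently. The clipboard is injected for testability, and `copyResponse` and the `notify` callback are hypothetical.

```typescript
// The one method used from navigator.clipboard.
interface ClipboardLike {
  writeText(text: string): Promise<void>;
}

// Copy one LLM response and report the outcome so the UI can show feedback.
async function copyResponse(
  clipboard: ClipboardLike,
  text: string,
  notify: (message: string) => void,
): Promise<boolean> {
  try {
    await clipboard.writeText(text);
    notify("Copied to clipboard");
    return true;
  } catch {
    notify("Could not copy. Please select the text and copy it manually.");
    return false;
  }
}

// Browser wiring (assumed): copyResponse(navigator.clipboard, message.content, showToast);
```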

Conclusion

This project was inspiring for our team, and we love the final product. Since this is just the initial MVP, we have other ideas we’d love to explore using AI for this eLearning client in the future. One is to let instructors send an entire conversation to the editor or copy it to the clipboard. Another is to provide more ways to rerun a message.

The industry enthusiasm and trepidation around everything AI is overwhelming. While there’s some misunderstanding about what “AI” really means in a contemporary context, there are AI tools that can do incredibly cool things. And contrary to what the hype may lead you to believe, these tools are already right at your fingertips—just an API integration away.

Definitions

With “AI” being relatively new and the hype being relatively high, here are a few terms used in the article:

AI - Artificial Intelligence: Historically, AI meant machine intelligence. Today it is an umbrella term for code that uses “learning” to make “decisions.” “Learning,” in this context, means training a program on a (very) large dataset. “Decisions” does not imply thinking; rather, the program uses the patterns learned in training to generate a response that aligns with the training data.

LLM - Large Language Model: LLMs use deep learning to train on text data such as books, articles, and web pages. The resulting model can generate a reasonably accurate response to a query (user input) based on its training data. The result usually appears as though the LLM is thinking or solving a problem when, in reality, it’s computationally generating a response from patterns and connections between words and phrases.

OpenAI: An AI research lab and for-profit subsidiary corporation that owns ChatGPT and DALL-E, among other AI assets. OpenAI offers paid APIs for its AI assets.

ChatGPT: An LLM chatbot that is trained and programmed to generate natural language responses to input queries. The GPT models behind it are available through a simple paid API.

DALL-E: A deep learning model that generates images based on input text.